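```python
# pcb_defect_detection_npu.py
import torch
import torch.nn as nn
import torch.optim as optim
import torchvision.transforms as transforms
from torch.utils.data import Dataset, DataLoader
import cv2
import numpy as np
from PIL import Image

# Ascend PyTorch adapter: importing torch_npu registers the NPU device
# and the torch.npu namespace used below
import torch_npu  # noqa: F401

# Mixed-precision training through CANN, which can substantially raise NPU compute efficiency
from torch.npu.amp import autocast, GradScaler
```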
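`GradScaler` performs dynamic loss scaling. Before `backward()`, the loss is multiplied by a scale factor so that small half-precision gradients do not underflow to zero. The gradients are divided by the same factor before the optimizer step, and that step is skipped if any gradient overflowed. The factor shrinks after an overflow and grows again after a run of clean steps. The pure-Python sketch below mimics that policy, assuming the NPU scaler behaves like PyTorch's CUDA `GradScaler`. The class `ToyLossScaler` is hypothetical, and its constants (initial scale 2**16, growth factor 2, backoff 0.5, growth interval 2000) are the CUDA `GradScaler` defaults. They are assumed here, not taken from the torch_npu implementation.

```python
import math


class ToyLossScaler:
    """Hypothetical illustration of dynamic loss scaling (not the torch_npu API)."""

    def __init__(self, init_scale=2.0**16, growth_factor=2.0,
                 backoff_factor=0.5, growth_interval=2000):
        self.scale = init_scale
        self.growth_factor = growth_factor
        self.backoff_factor = backoff_factor
        self.growth_interval = growth_interval
        self._good_steps = 0  # consecutive steps without overflow

    def scale_loss(self, loss):
        # Enlarge the loss so small gradients survive in half precision
        return loss * self.scale

    def unscale_and_check(self, scaled_grads):
        # Undo the scaling; an inf/NaN means the caller should skip this optimizer step
        grads = [g / self.scale for g in scaled_grads]
        overflow = any(math.isinf(g) or math.isnan(g) for g in grads)
        return grads, overflow

    def update(self, overflow):
        if overflow:
            # Overflow: back off and restart the clean-step counter
            self.scale *= self.backoff_factor
            self._good_steps = 0
        else:
            self._good_steps += 1
            if self._good_steps == self.growth_interval:
                # Enough clean steps in a row: try a larger scale
                self.scale *= self.growth_factor
                self._good_steps = 0


scaler = ToyLossScaler(growth_interval=3)
grads, overflow = scaler.unscale_and_check([32768.0, float("inf")])
scaler.update(overflow)   # overflow detected, so the scale halves
print(scaler.scale)       # 32768.0
for _ in range(3):
    scaler.update(False)  # three clean steps, so the scale doubles
print(scaler.scale)       # 65536.0
```

In the training loop this logic is hidden behind `scaler.scale(loss).backward()`, `scaler.step(optimizer)` and `scaler.update()`.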

```python
class PCBDefectDataset(Dataset):
    """Synthetic PCB defect-detection dataset."""

    def __init__(self, num_samples=1000, img_size=224):
        self.num_samples = num_samples
        self.img_size = img_size
        self.defect_types = ['missing_hole', 'mouse_bite', 'open_circuit',
                             'short', 'spur', 'spurious_copper']
        self.transform = transforms.Compose([
            transforms.ToTensor(),
            # ImageNet channel statistics
            transforms.Normalize(mean=[0.485, 0.456, 0.406],
                                 std=[0.229, 0.224, 0.225])
        ])

    def __len__(self):
        return self.num_samples

    def generate_pcb_image(self):
        """Generate a simulated defect-free PCB image."""
        # Green board substrate, colour (60, 120, 60)
        img = np.ones((self.img_size, self.img_size, 3), dtype=np.uint8) * 60
        img[:, :, 1] = 120  # boost the green channel

        # Basic circuit features (coordinates assume img_size=224)
        cv2.rectangle(img, (30, 30), (180, 80), (200, 150, 80), 3)  # pad
        cv2.line(img, (50, 100), (150, 100), (180, 130, 70), 2)      # trace
        cv2.circle(img, (100, 150), 15, (200, 150, 80), -1)          # via
        return img

    def add_defect(self, img, defect_type):
        """Add a defect of the given type (drawing in board colour erases copper)."""
        if defect_type == 'missing_hole':
            # Missing hole: no hole where one should be, so paint over the via
            cv2.circle(img, (100, 150), 15, (60, 120, 60), -1)
        elif defect_type == 'mouse_bite':
            # Mouse bite: a notch bitten out of the trace edge
            cv2.circle(img, (120, 100), 8, (60, 120, 60), -1)
        elif defect_type == 'open_circuit':
            # Open circuit: the trace is broken, so erase a gap between x=90 and x=110
            cv2.rectangle(img, (90, 96), (110, 104), (60, 120, 60), -1)
        elif defect_type == 'short':
            # Short: a connection where there should be none
            cv2.line(img, (80, 90), (80, 110), (220, 100, 60), 2)
        elif defect_type == 'spur':
            # Spur: a protrusion on the trace
            points = np.array([[140, 95], [150, 90], [155, 100]], np.int32)
            cv2.fillPoly(img, [points], (180, 130, 70))
        elif defect_type == 'spurious_copper':
            # Spurious copper: extra copper foil
            cv2.circle(img, (60, 60), 10, (180, 140, 90), -1)
        return img

    def __getitem__(self, idx):
        # Deterministic labels: every 10th index (10% of samples) is normal,
        # the remaining indices cycle through the six defect types
        if idx % 10 == 0:
            defect_type = 'normal'
            label = 6  # label 6 = normal
        else:
            defect_type = self.defect_types[idx % len(self.defect_types)]
            label = self.defect_types.index(defect_type)

        # Generate the image
        img = self.generate_pcb_image()
        if defect_type != 'normal':
            img = self.add_defect(img, defect_type)

        # Apply the transforms
        img = Image.fromarray(img)
        img = self.transform(img)
        return img, label
```
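`__getitem__` does not sample labels at random. Each label is a fixed function of the index. The stdlib-only sketch below reproduces that rule and counts the labels for the default `num_samples=1000`. The helper `label_for_index` and the module-level constants are hypothetical names introduced only for this check.

```python
from collections import Counter

DEFECT_TYPES = ['missing_hole', 'mouse_bite', 'open_circuit',
                'short', 'spur', 'spurious_copper']
NORMAL_LABEL = 6


def label_for_index(idx):
    """Hypothetical helper mirroring the labelling rule in PCBDefectDataset.__getitem__."""
    if idx % 10 == 0:
        return NORMAL_LABEL
    return idx % len(DEFECT_TYPES)


counts = Counter(label_for_index(i) for i in range(1000))
for label in sorted(counts):
    name = DEFECT_TYPES[label] if label < NORMAL_LABEL else 'normal'
    print(f"{label} {name:<16} {counts[label]}")
```

Indices divisible by 10 are always even, so only the even-numbered defect labels (`missing_hole`, `open_circuit`, `spur`) give up samples to the normal class. Those three end up with 133, 134 and 133 samples, while the other defect classes keep 166 or 167. Neither image generation nor the transform involves any randomness, so all samples that share a label are also pixel-identical.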

```python
class FastDefectDetector(nn.Module):
    """Lightweight defect-detection model (optimized for the NPU)."""

    def __init__(self, num_classes=7):
        super().__init__()
        # Feature-extraction backbone, using a convolution configuration suited to the NPU
        self.features = nn.Sequential(
            nn.Conv2d(3, 32, kernel_size=3, stride=2, padding=1),
            nn.BatchNorm2d(32),
            nn.ReLU(inplace=True),
            nn.Conv2d(32, 64, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2),
            nn.Conv2d(64, 128, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(128),
            nn.ReLU(inplace=True),
            nn.Conv2d(128, 256, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(256),
            nn.ReLU(inplace=True),
            nn.AdaptiveAvgPool2d((1, 1))
        )
        # Classifier head
        self.classifier = nn.Sequential(
            nn.Dropout(0.2),
            nn.Linear(256, 128),
            nn.ReLU(inplace=True),
            nn.Linear(128, num_classes)
        )

    def forward(self, x):
        x = self.features(x)
        x = x.view(x.size(0), -1)  # (N, 256, 1, 1) -> (N, 256)
        x = self.classifier(x)
        return x
```
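The "lightweight" label can be checked by hand. The plain-Python sketch below works through the layer arithmetic instead of instantiating the PyTorch model. It tracks the spatial size of a 224×224 input through each stride and pooling step, then counts the trainable parameters: convolution and linear weights plus biases, and the two affine parameters of each `BatchNorm2d`. The helper functions are hypothetical names used only for this calculation.

```python
def conv_out(size, kernel, stride, padding):
    """Output size of a conv/pool layer along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


# Spatial size through FastDefectDetector.features for a 224x224 input
size = 224
size = conv_out(size, 3, 2, 1)  # Conv2d(3, 32, stride=2) -> 112
size = conv_out(size, 3, 1, 1)  # Conv2d(32, 64)          -> 112
size = conv_out(size, 2, 2, 0)  # MaxPool2d(2, 2)         -> 56
size = conv_out(size, 3, 1, 1)  # Conv2d(64, 128)         -> 56
size = conv_out(size, 3, 1, 1)  # Conv2d(128, 256)        -> 56
print("feature map before global pooling:", size)  # AdaptiveAvgPool2d then reduces it to 1x1


def conv_params(c_in, c_out, k=3):
    return c_in * c_out * k * k + c_out  # weights + bias


def bn_params(c):
    return 2 * c  # learnable weight and bias; running mean/var are buffers, not parameters


def linear_params(n_in, n_out):
    return n_in * n_out + n_out


total = (conv_params(3, 32) + bn_params(32)
         + conv_params(32, 64) + bn_params(64)
         + conv_params(64, 128) + bn_params(128)
         + conv_params(128, 256) + bn_params(256)
         + linear_params(256, 128) + linear_params(128, 7))
print("trainable parameters:", total)
```

The total comes to 423,175 parameters (about 0.42 M), the figure that `sum(p.numel() for p in model.parameters())` should report for `FastDefectDetector(num_classes=7)`. More than two thirds of it sits in the last 128→256 convolution.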

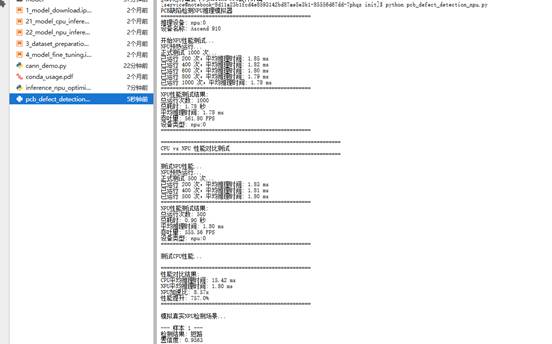

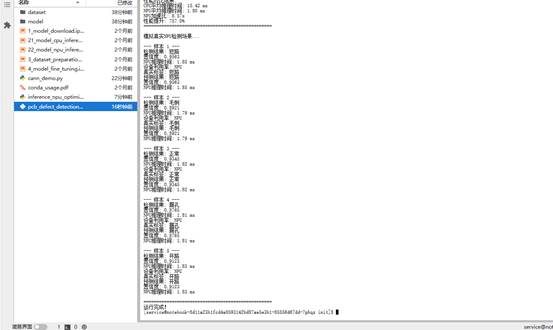

```python
def train_model_npu(num_epochs=20):
    """Train the model on the NPU with mixed precision."""
    print("Training the PCB defect detection model (NPU-accelerated)...")

    # Select the NPU device
    device = torch.device('npu:0')
    print(f"Device: {device}")
    print(f"Device name: {torch.npu.get_device_name(0)}")

    # Prepare the data
    dataset = PCBDefectDataset(num_samples=1000)
    train_loader = DataLoader(dataset, batch_size=32, shuffle=True)

    # Build the model and move it to the NPU
    model = FastDefectDetector(num_classes=7).to(device)
    criterion = nn.CrossEntropyLoss().to(device)
    optimizer = optim.Adam(model.parameters(), lr=0.001)

    # Key to NPU mixed-precision training: GradScaler (dynamic loss scaling)
    scaler = GradScaler()

    # Training loop
    model.train()
    for epoch in range(num_epochs):
        total_loss = 0.0
        correct = 0
        total = 0
        for batch_idx, (data, target) in enumerate(train_loader):
            data, target = data.to(device), target.to(device)
            optimizer.zero_grad()

            # Mixed-precision forward pass
            with autocast():
                output = model(data)
                loss = criterion(output, target)

            # Scaled backward pass; step() skips the update when gradients overflow
            scaler.scale(loss).backward()
            scaler.step(optimizer)
            scaler.update()

            total_loss += loss.item()
            _, predicted = output.max(1)
            total += target.size(0)
            correct += predicted.eq(target).sum().item()

        accuracy = 100. * correct / total
        avg_loss = total_loss / len(train_loader)
        print(f'Epoch [{epoch+1}/{num_epochs}], Loss: {avg_loss:.4f}, Accuracy: {accuracy:.2f}%')

    # Save the model
    torch.save(model.state_dict(), 'pcb_defect_model_npu.pth')
    print("NPU model training finished; saved to pcb_defect_model_npu.pth")
    return model
```
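`avg_loss` divides the sum of per-batch mean losses by `len(train_loader)`, which is the number of batches. With 1,000 samples and `batch_size=32` there are 32 batches, and the last one holds only 8 samples. This average therefore weights the short batch as heavily as a full one. The accuracy, by contrast, is already sample-weighted, because `correct` and `total` are counted per sample. The stdlib sketch below contrasts the two loss averages. The helper `batch_sizes` is hypothetical, and the per-batch losses are made-up illustrative values, not results from this model.

```python
import math


def batch_sizes(num_samples, batch_size):
    """Sizes of the batches a DataLoader yields with drop_last=False."""
    num_batches = math.ceil(num_samples / batch_size)
    sizes = [batch_size] * (num_batches - 1)
    sizes.append(num_samples - batch_size * (num_batches - 1))
    return sizes


sizes = batch_sizes(1000, 32)
print(len(sizes), sizes[-1])  # 32 batches, the last one has 8 samples

# Illustrative (made-up) per-batch mean losses: 0.2 for full batches, 1.0 for the short one
batch_losses = [0.2] * (len(sizes) - 1) + [1.0]

# What the training loop reports: every batch counts equally
batch_weighted = sum(batch_losses) / len(batch_losses)
# Alternative: weight each batch mean by its number of samples
sample_weighted = sum(loss * n for loss, n in zip(batch_losses, sizes)) / sum(sizes)
print(f"{batch_weighted:.4f} {sample_weighted:.4f}")  # 0.2250 0.2064
```

To report the sample-weighted figure in the real loop, accumulate `loss.item() * target.size(0)` and divide by `total` at the end of the epoch.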

```python
if __name__ == "__main__":
    # Set the default NPU device
    torch.npu.set_device(0)
    model = train_model_npu()
```
