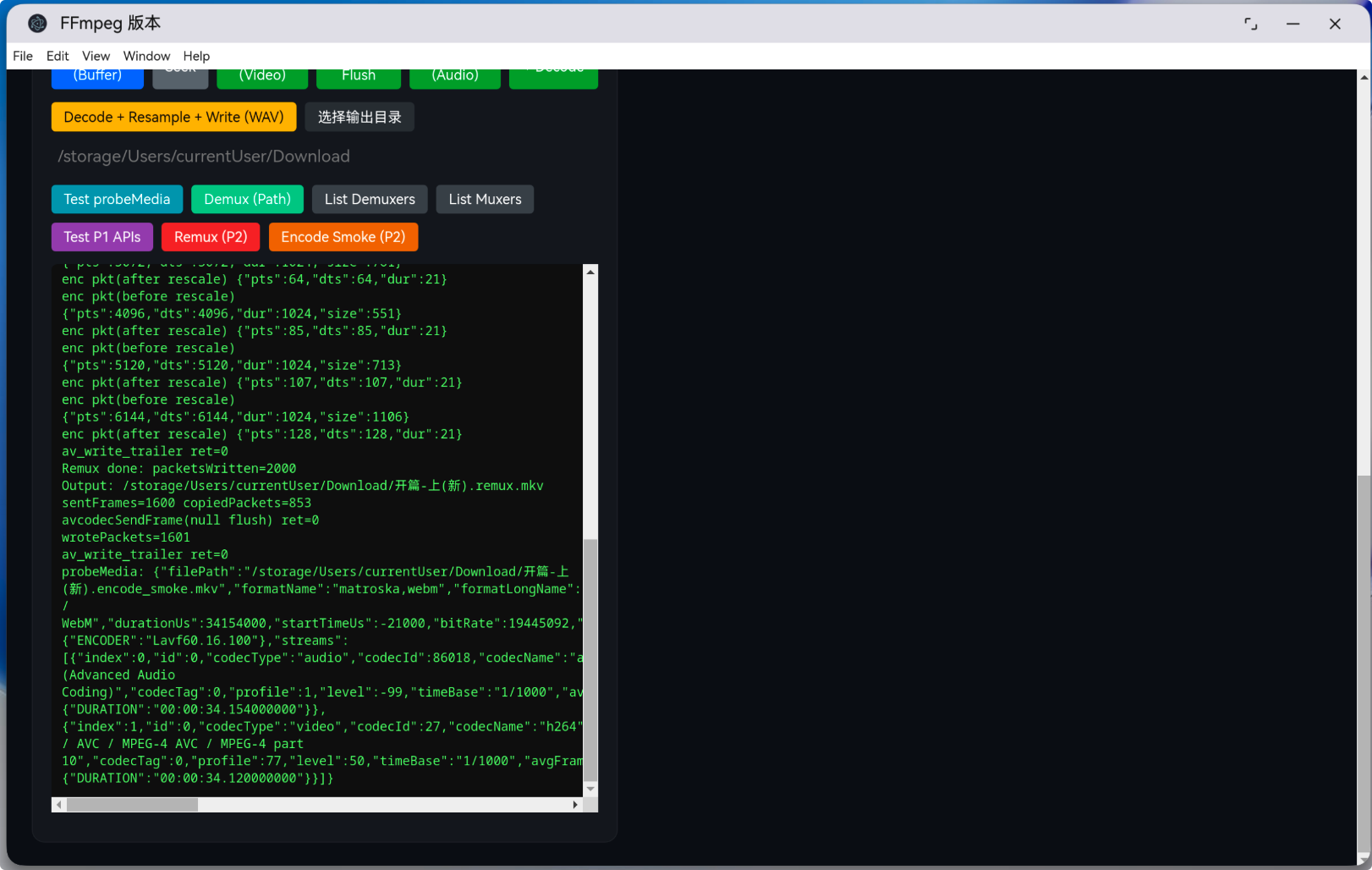

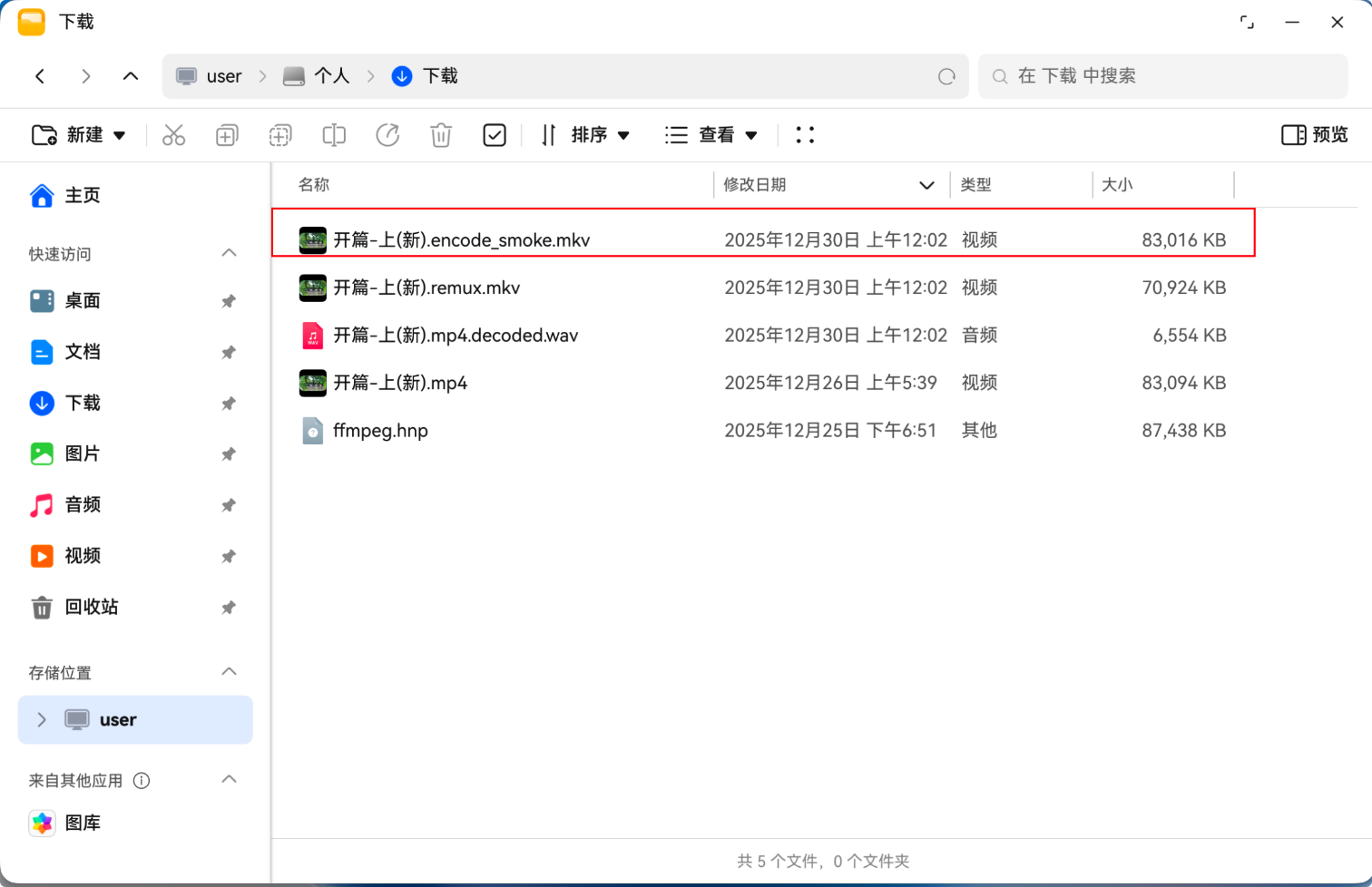

Encode Smoke (P2) with FFmpeg + Electron on HarmonyOS PC, a Troubleshooting Log: From "Won't Play / Duration 0" to "Video Preserved and Full Duration Transcoded"

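This article records the typical problems hit while building an Electron + FFmpeg media transcoding pipeline on HarmonyOS PC, and how each was solved. For every problem it gives the symptom, the root cause and the code fix, ending with a working transcode that re-encodes the audio and copies the video stream. The main problems were: mishandled encoder buffering that produced EAGAIN errors, a wrongly assembled output path, an abnormal duration caused by discontinuous timestamps, and incomplete stream handling. The fixes add an encoder drain step, output path normalization, continuous PTS generation and multi-stream handling.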


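The setup is a minimal transcoding pipeline in the repository: Electron + ffmpeg_addon (Node/N-API) + a Renderer page that triggers it. What follows is a real troubleshooting session: the error messages, the code that triggered them, how each problem was located, the fix, and a final runnable example.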

1. Background and Goals

The project has a button, Encode Smoke (P2), that exercises a minimal end-to-end pipeline:

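- Input: a media file chosen by the user (read into a buffer on the renderer side)

- Processing: FFmpeg demux → decode (audio) → encode (AAC; the code looks up the encoder for the input's own codec ID) → mux (MKV)

- Output: write a .mkv file to disk, then read it back with probeMedia to verify duration and stream info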

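The initial goal was only to confirm that the pipeline runs end to end. It was gradually raised to "the output plays + the duration is correct + the video is kept + the full length is transcoded".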

2. System Structure (Data and Control Paths)

```text
User clicks the button → Renderer
        ↓
Renderer → preload.js (contextBridge)
        ↓
preload.js → main.js (ipcMain)
        ↓
main.js → ffmpeg_addon.node (N-API)
        ↓
ffmpeg_addon.node → FFmpeg
        ↓
FFmpeg → output .mkv file
        ↑
Renderer → preload.js (probeMedia, reads the output back)
```

Key point: the Renderer never links against FFmpeg directly. Every native call travels window.ffmpeg → IPC → addon → FFmpeg.

3. "Demux / Decode / Encode / Mux" Sequence Diagram (Core Flow)

4. Error Messages and Their Root Causes (in Chronological Order)

4.1 avcodecSendFrame ret=-11 (EAGAIN)

Symptom

- Log:

enc avcodecSendFrame ret=-11

Root cause

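-11 is essentially AVERROR(EAGAIN) (EAGAIN is 11 on Linux-based systems). It means the encoder will not accept more input in its current state: you must first call receive_packet to pull out the packets it has already produced and write them, and only then call send_frame again.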

The failing code pattern (the problem spot)

- A single send_frame call, with no drain:

```javascript
const sf = await window.ffmpeg.avcodecSendFrame(encCtx, frame);
if (sf < 0) throw new Error(`sendFrame failed: ${sf}`);
```

Fix

- Add drainEncoder(): loop on avcodecReceivePacket, rescale each packet's timestamps (rescale_ts), set stream_index/pos, then call avInterleavedWriteFrame.

- When send_frame returns -11: call drainEncoder() first, then retry send_frame.
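The drain-and-retry rule can be modelled without FFmpeg. The sketch below is a minimal, hypothetical simulation: `MockEncoder`, `drainEncoder` and `sendWithRetry` are illustrative names, not the addon API, and the mock's fixed-capacity buffer only imitates the EAGAIN contract of send_frame/receive_packet. It shows that retrying after a drain neither loses nor reorders frames.

```javascript
// Hypothetical model of the send/drain/retry pattern; MockEncoder is NOT the addon API.
const EAGAIN = -11; // AVERROR(EAGAIN) on Linux-based systems (EAGAIN = 11)

class MockEncoder {
  constructor(capacity) {
    this.capacity = capacity; // packets buffered before sendFrame refuses input
    this.queue = [];
  }
  sendFrame(frame) {
    if (this.queue.length >= this.capacity) return EAGAIN; // caller must drain first
    this.queue.push({ pts: frame.pts });
    return 0;
  }
  receivePacket() {
    return this.queue.length > 0 ? { ret: 0, pkt: this.queue.shift() } : { ret: EAGAIN };
  }
}

// Pull every packet the encoder currently holds and hand it to `write`.
function drainEncoder(enc, write) {
  for (;;) {
    const { ret, pkt } = enc.receivePacket();
    if (ret !== 0) return ret; // EAGAIN: nothing more buffered right now
    write(pkt);
  }
}

// Send one frame; on EAGAIN, drain and retry instead of failing.
function sendWithRetry(enc, frame, write) {
  for (;;) {
    const ret = enc.sendFrame(frame);
    if (ret !== EAGAIN) return ret;
    drainEncoder(enc, write);
  }
}

const written = [];
const enc = new MockEncoder(2);
for (let pts = 0; pts < 5; pts++) {
  const ret = sendWithRetry(enc, { pts }, (pkt) => written.push(pkt.pts));
  if (ret < 0) throw new Error(`sendFrame failed: ${ret}`);
}
drainEncoder(enc, (pkt) => written.push(pkt.pts)); // final flush of what is left
console.log(written); // [ 0, 1, 2, 3, 4 ]
```

In the repository code the same retry is the `for (;;)` loop around avcodecSendFrame, with `drainEncoder(64)` called on -11.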

4.2 drainEncoder is not defined

Symptom

- Log:

Error: drainEncoder is not defined

Root cause

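- A scoping problem: the function was not defined in a scope visible to testEncodeP2Smoke() (or a stale build/cache meant the renderer did not load the latest definition).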

Fix

- Move drainEncoder() inside testEncodeP2Smoke() so it uses the same encCtx/outPkt/outCtx/outStreamIndex variables.
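A minimal sketch of why the move fixes the error, relying only on standard JavaScript scoping: a function declared inside another function is visible only there, and it shares that function's local variables. `makeEncodeSession` is a hypothetical stand-in for testEncodeP2Smoke(), and the array replaces the real encCtx/outPkt/outCtx state.

```javascript
// Hypothetical stand-in for testEncodeP2Smoke(): drainEncoder is declared inside it,
// so it closes over the same per-run state as the rest of the function.
function makeEncodeSession() {
  const written = [];   // stands in for outCtx / outPkt
  let wrotePackets = 0; // shared counter, like the real function's wrotePackets

  function drainEncoder(pendingPackets) {
    for (const pkt of pendingPackets) {
      written.push(pkt);
      wrotePackets++;
    }
    return wrotePackets;
  }

  return { drainEncoder, written, count: () => wrotePackets };
}

const session = makeEncodeSession();
session.drainEncoder(['a', 'b']);
session.drainEncoder(['c']);
console.log(session.count(), session.written); // 3 [ 'a', 'b', 'c' ]

// Calling the helper from a scope that cannot see it reproduces the original error.
try {
  drainEncoder([]);
} catch (e) {
  console.log(e.message); // drainEncoder is not defined
}
```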

4.3 avFormatAvioOpen2 ret=-20 (ENOTDIR)

Symptom

- The log typically shows an output path assembled as xxx.mp4/yyy.mkv, followed by avFormatAvioOpen2 ret=-20

Root cause

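- A file path instead of a directory path was passed in when choosing the output directory. The later code appended the output file name to it as if it were a directory, producing an invalid path with ...mp4/ in the middle. Opening it fails with ENOTDIR because one path component (the .mp4 file) is not a directory.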

Fix

- Normalize the output directory: if the last path segment has a media file extension, truncate it to the parent directory.
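The post calls normalizeOutputDir() but does not show it. The following is a hypothetical sketch of that rule, assuming forward-slash output paths and a hand-picked extension list (`MEDIA_EXTENSIONS` is an assumption, not taken from the repository).

```javascript
// Hypothetical sketch of normalizeOutputDir(); the repository's version is not shown.
// The extension list is an assumption; extend it to match the inputs you accept.
const MEDIA_EXTENSIONS = new Set([
  'mp4', 'mkv', 'mov', 'avi', 'flv', 'webm', 'ts', 'mp3', 'aac', 'wav', 'm4a', 'flac',
]);

function normalizeOutputDir(dir) {
  // Unify separators and drop trailing slashes so the last segment is meaningful.
  let p = String(dir).replace(/\\/g, '/').replace(/\/+$/, '') || '/';
  const slash = p.lastIndexOf('/');
  const last = p.slice(slash + 1);
  const dot = last.lastIndexOf('.');
  const ext = dot > 0 ? last.slice(dot + 1).toLowerCase() : '';
  // If the last segment looks like a media file, use its parent directory instead.
  if (ext && MEDIA_EXTENSIONS.has(ext) && slash >= 0) {
    p = slash === 0 ? '/' : p.slice(0, slash);
  }
  return p;
}

console.log(normalizeOutputDir('/data/storage/Videos/clip.mp4')); // /data/storage/Videos
console.log(normalizeOutputDir('/data/storage/Videos/'));         // /data/storage/Videos
```

With the first (hypothetical) path, the post's template `${outDir}/${baseName}.encode_smoke.mkv` yields /data/storage/Videos/clip.encode_smoke.mkv instead of .../clip.mp4/clip.encode_smoke.mkv.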

4.4 "The exported MKV has a duration of 0"

Symptom

- The file is created, but the player shows 0s, or ffprobe/probeMedia reports an abnormal duration.

Root causes (most common)

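- PTS/DTS are discontinuous or missing;

- the encoder and output stream time_base differ and no rescale is done;

- frame.pts from the audio decoder may be AV_NOPTS_VALUE and has to be filled in yourself.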

Fix

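- Generate continuous PTS from the sample count: keep a nextPtsSamples counter, set frame.pts = nextPtsSamples for each frame, then nextPtsSamples += nb_samples (falling back to 1024, the usual AAC frame size, when it cannot be read). This counts one PTS tick per sample, so it assumes the encoder time_base is 1/sample_rate.

- The AAC packets the encoder emits then have steadily increasing pts/dts, which lets the container compute the correct duration.

- Also log packet timestamps before and after rescaling, to quickly confirm the rescale is correct.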
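The timestamp arithmetic can be checked in isolation. This sketch assumes the encoder time_base is 1/sample_rate and re-implements av_rescale_q()'s default rounding (round to nearest, ties away from zero) in plain JavaScript with BigInt. `assignPts` and `rescaleQ` are illustrative names, not addon functions. The 200-frame, 48 kHz input reproduces the ~4.27 s figure from 4.5.

```javascript
// rescaleQ(a, bq, cq) = a * bq / cq, rounded like av_rescale_q (nearest, ties away from 0).
// Plain JS re-implementation for checking numbers; it is not an FFmpeg binding.
function rescaleQ(a, [bn, bd], [cn, cd]) {
  const num = BigInt(a) * BigInt(bn) * BigInt(cd);
  const den = BigInt(bd) * BigInt(cn); // assumed positive
  const half = den / 2n;
  return num >= 0n ? (num + half) / den : -((-num + half) / den);
}

// Sample-counter PTS: each frame starts where the previous one ended.
function assignPts(frameSampleCounts, fallback = 1024) {
  let nextPtsSamples = 0;
  return frameSampleCounts.map((nb) => {
    const pts = nextPtsSamples;               // frame.pts = nextPtsSamples
    nextPtsSamples += nb > 0 ? nb : fallback; // advance by nb_samples (1024 if unknown)
    return pts;
  });
}

const sampleRate = 48000;
const pts = assignPts(new Array(200).fill(1024));
const endSamples = pts[pts.length - 1] + 1024; // 204800 samples
const endMs = rescaleQ(endSamples, [1, sampleRate], [1, 1000]);
console.log(pts.slice(0, 3), endMs); // [ 0, 1024, 2048 ] 4267n
```

If the encoder's real time_base were not 1/sample_rate, these PTS values would have to be rescaled into it before avcodecSendFrame.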

4.5 "The output is only 4 seconds long and has no video"

Symptom (from one verification log)

sentFrames=200, probeMedia.durationUs=4287000 (about 4.287 s), nbStreams=1, audio stream only

Root cause

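- At that point the smoke test processed only the "best audio stream" and never wrote the video stream to the output, so there could be no picture.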

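- On top of that, the number of encoded frames had been temporarily capped at 200 while debugging the duration. With 1024-sample AAC frames at 48 kHz that is 200 × 1024 / 48000 ≈ 4.27 s, which agrees with the probe result.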

Fix

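- Encode Smoke was upgraded to "re-encode audio + remux the other streams":

  - Best audio stream: decode → encode → write

  - Other streams (usually including video): create an output stream by copying codecpar, rescale the timestamps, and write the packets directly

- Read packets in a loop until EOF, with no 200-frame cap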
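The input-to-output stream mapping behind this design can be sketched as a pure function. The stream descriptors here are hypothetical plain objects (in the real code they come from avFormatGetStream / avFormatGetStreamCodecpar), and `buildStreamMap` is an illustrative name. It relies on avformat_new_stream() assigning output indices in creation order, which is why the re-encoded audio, created first, becomes output stream 0.

```javascript
// Hypothetical sketch of the "re-encode audio + remux the rest" stream map.
function buildStreamMap(inputStreams, bestAudioIdx) {
  const outIndexByInIndex = new Array(inputStreams.length).fill(-1); // -1 = drop packets
  const plan = [];
  // The encoded audio stream is created first, so it gets output index 0.
  outIndexByInIndex[bestAudioIdx] = 0;
  plan.push({ in: bestAudioIdx, out: 0, mode: 'encode' });
  let next = 1;
  inputStreams.forEach((st, i) => {
    if (i === bestAudioIdx) return;
    if (!st.hasCodecpar) return; // no codecpar: leave mapped to -1, packets are skipped
    outIndexByInIndex[i] = next;
    plan.push({ in: i, out: next, mode: 'copy' }); // codecpar copy + rescale + write
    next++;
  });
  return { outIndexByInIndex, plan };
}

// Typical input: video at index 0, audio at index 1.
const { outIndexByInIndex, plan } = buildStreamMap(
  [{ type: 'video', hasCodecpar: true }, { type: 'audio', hasCodecpar: true }],
  1,
);
console.log(outIndexByInIndex); // [ 1, 0 ]
console.log(plan.map((p) => `${p.in}->${p.out}:${p.mode}`).join(' ')); // 1->0:encode 0->1:copy
```

In the main loop, a packet whose mapped index is -1 is simply unreffed and skipped, exactly like the `outMappedIndex < 0` branch in the final code.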

5. Error Code Reference (for Quick Triage)

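| Return value | Macro / meaning | Typical cause | How to handle |
|---|---|---|---|
| -11 | AVERROR(EAGAIN) | The encoder/decoder needs you to drain its output first | Loop on receive (frame/packet), write the output, then retry send |
| -20 | AVERROR(ENOTDIR) | Path assembled wrongly, treating a file as a directory | Normalize the output directory and fix the path joining |
| -1094995529 | AVERROR_INVALIDDATA | Incomplete data or wrong parameters when writing the header | Check the output stream codecpar/time_base and whether the container supports the codec |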
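These numbers can be decoded mechanically. In FFmpeg, AVERROR(e) is -e for POSIX errno values, and tags such as AVERROR_INVALIDDATA are built by the FFERRTAG macro from four characters. The sketch below recomputes them in JavaScript. The errno numbers 11 and 20 are the Linux values; that HarmonyOS PC uses the same numbers is an assumption consistent with the logs above. `describeFfmpegError` is a hypothetical helper.

```javascript
// FFERRTAG(a, b, c, d) in FFmpeg is -(a | b << 8 | c << 16 | d << 24).
function ffErrTag(a, b, c, d) {
  const tag = (a.charCodeAt(0) | (b.charCodeAt(0) << 8) |
               (c.charCodeAt(0) << 16) | (d.charCodeAt(0) << 24)) >>> 0; // unsigned 32-bit
  return -tag;
}

// errno values below are the Linux ones (assumed to match HarmonyOS PC).
const KNOWN = new Map([
  [-11, 'AVERROR(EAGAIN): drain with receive_*, then retry send_*'],
  [-20, 'AVERROR(ENOTDIR): a path component is a file; normalize the output dir'],
  [ffErrTag('I', 'N', 'D', 'A'), 'AVERROR_INVALIDDATA: check codecpar/time_base/container'],
  [ffErrTag('E', 'O', 'F', ' '), 'AVERROR_EOF: end of stream, stop reading/receiving'],
]);

function describeFfmpegError(ret) {
  if (ret >= 0) return 'ok';
  return KNOWN.get(ret) ?? `unknown error ${ret}`;
}

console.log(ffErrTag('I', 'N', 'D', 'A')); // -1094995529
console.log(describeFfmpegError(-20));
```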

6. Final Runnable Example Code (Matches the Repository)

All code below is taken from the actual files in the current repository.

6.1 main.js: IPC handlers, including the new PTS/DTS/duration setters

```javascript
// Excerpt from main.js. ipcMain comes from require('electron');
// loadFFmpegAddon() is defined elsewhere in the same file.
ipcMain.handle('ffmpeg:AVFrame_pts_get', async (_event, frame) => {
  const addon = loadFFmpegAddon();
  return addon.AVFrame_pts_get(frame);
});
ipcMain.handle('ffmpeg:AVFrame_pts_set', async (_event, frame, pts) => {
  const addon = loadFFmpegAddon();
  return addon.AVFrame_pts_set(frame, pts);
});
ipcMain.handle('ffmpeg:AVFrame_pkt_dts_get', async (_event, frame) => {
  const addon = loadFFmpegAddon();
  return addon.AVFrame_pkt_dts_get(frame);
});
ipcMain.handle('ffmpeg:AVFrame_key_frame_get', async (_event, frame) => {
  const addon = loadFFmpegAddon();
  return addon.AVFrame_key_frame_get(frame);
});
ipcMain.handle('ffmpeg:AVFrame_pict_type_get', async (_event, frame) => {
  const addon = loadFFmpegAddon();
  return addon.AVFrame_pict_type_get(frame);
});
ipcMain.handle('ffmpeg:AVFrame_sample_rate_get', async (_event, frame) => {
  const addon = loadFFmpegAddon();
  return addon.AVFrame_sample_rate_get(frame);
});
ipcMain.handle('ffmpeg:AVFrame_nb_samples_get', async (_event, frame) => {
  const addon = loadFFmpegAddon();
  return addon.AVFrame_nb_samples_get(frame);
});
ipcMain.handle('ffmpeg:AVFrame_channels_get', async (_event, frame) => {
  const addon = loadFFmpegAddon();
  return addon.AVFrame_channels_get(frame);
});
ipcMain.handle('ffmpeg:AVFrame_channel_layout_get', async (_event, frame) => {
  const addon = loadFFmpegAddon();
  return addon.AVFrame_channel_layout_get(frame);
});

// --- Accessor IPC Handlers (Example for Packet properties) ---
ipcMain.handle('ffmpeg:AVPacket_size_get', async (_event, pkt) => {
  const addon = loadFFmpegAddon();
  return addon.AVPacket_size_get(pkt);
});
ipcMain.handle('ffmpeg:AVPacket_stream_index_get', async (_event, pkt) => {
  const addon = loadFFmpegAddon();
  return addon.AVPacket_stream_index_get(pkt);
});
ipcMain.handle('ffmpeg:AVPacket_stream_index_set', async (_event, pkt, idx) => {
  const addon = loadFFmpegAddon();
  return addon.AVPacket_stream_index_set(pkt, idx);
});
ipcMain.handle('ffmpeg:AVPacket_pts_get', async (_event, pkt) => {
  const addon = loadFFmpegAddon();
  return addon.AVPacket_pts_get(pkt);
});
ipcMain.handle('ffmpeg:AVPacket_pts_set', async (_event, pkt, pts) => {
  const addon = loadFFmpegAddon();
  return addon.AVPacket_pts_set(pkt, pts);
});
ipcMain.handle('ffmpeg:AVPacket_dts_get', async (_event, pkt) => {
  const addon = loadFFmpegAddon();
  return addon.AVPacket_dts_get(pkt);
});
ipcMain.handle('ffmpeg:AVPacket_dts_set', async (_event, pkt, dts) => {
  const addon = loadFFmpegAddon();
  return addon.AVPacket_dts_set(pkt, dts);
});
ipcMain.handle('ffmpeg:AVPacket_flags_get', async (_event, pkt) => {
  const addon = loadFFmpegAddon();
  return addon.AVPacket_flags_get(pkt);
});
ipcMain.handle('ffmpeg:AVPacket_duration_get', async (_event, pkt) => {
  const addon = loadFFmpegAddon();
  return addon.AVPacket_duration_get(pkt);
});
ipcMain.handle('ffmpeg:AVPacket_duration_set', async (_event, pkt, duration) => {
  const addon = loadFFmpegAddon();
  return addon.AVPacket_duration_set(pkt, duration);
});
```
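Every handler above follows the same pattern. As a hypothetical refactor (not what the repository does), the channels could be registered from a list. `registerAccessors` is an illustrative name. `ipcMain` and the addon loader are injected so the sketch runs under plain Node with fakes; in main.js you would pass Electron's `ipcMain` and the real `loadFFmpegAddon`. Channel names keep the 'ffmpeg:<AddonFunctionName>' convention used above.

```javascript
// Hypothetical table-driven registration of the accessor handlers.
function registerAccessors(ipcMain, loadFFmpegAddon, names) {
  for (const name of names) {
    ipcMain.handle(`ffmpeg:${name}`, async (_event, ...args) => {
      const addon = loadFFmpegAddon();
      if (typeof addon[name] !== 'function') {
        throw new Error(`addon.${name} is not exported`);
      }
      return addon[name](...args); // same call the hand-written handlers make
    });
  }
}

// Minimal stand-ins so the sketch runs without Electron or the native addon.
const handlers = new Map();
const fakeIpcMain = { handle: (channel, fn) => handlers.set(channel, fn) };
const fakeAddon = {
  AVPacket_pts_get: (pkt) => pkt.pts,
  AVPacket_pts_set: (pkt, pts) => { pkt.pts = pts; return 0; },
};

registerAccessors(fakeIpcMain, () => fakeAddon, ['AVPacket_pts_get', 'AVPacket_pts_set']);

const pkt = { pts: 0 };
handlers.get('ffmpeg:AVPacket_pts_set')(null, pkt, 1024)
  .then(() => handlers.get('ffmpeg:AVPacket_pts_get')(null, pkt))
  .then((pts) => console.log(pts)); // 1024
```

The trade-off is readability: explicit handlers are easy to grep, while the list keeps main.js and the addon's export names from drifting apart.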

6.2 preload.js: exposing setFramePts and the other APIs on window.ffmpeg

```javascript
const { contextBridge, ipcRenderer } = require('electron');

contextBridge.exposeInMainWorld('ffmpeg', {
  // ... other entries (format, codec, packet alloc, probeMedia, etc.) omitted ...

  // Frame APIs
  avFrameAlloc: () => ipcRenderer.invoke('ffmpeg:av_frame_alloc'),
  avFrameFree: (frame) => ipcRenderer.invoke('ffmpeg:av_frame_free', frame),
  getFrameWidth: (frame) => ipcRenderer.invoke('ffmpeg:AVFrame_width_get', frame),
  getFrameHeight: (frame) => ipcRenderer.invoke('ffmpeg:AVFrame_height_get', frame),
  getFrameFormat: (frame) => ipcRenderer.invoke('ffmpeg:AVFrame_format_get', frame),
  getFramePts: (frame) => ipcRenderer.invoke('ffmpeg:AVFrame_pts_get', frame),
  setFramePts: (frame, pts) => ipcRenderer.invoke('ffmpeg:AVFrame_pts_set', frame, pts),
  getFrameSampleRate: (frame) => ipcRenderer.invoke('ffmpeg:AVFrame_sample_rate_get', frame),
  getFrameNbSamples: (frame) => ipcRenderer.invoke('ffmpeg:AVFrame_nb_samples_get', frame),
  getFrameChannels: (frame) => ipcRenderer.invoke('ffmpeg:AVFrame_channels_get', frame),
  getFrameChannelLayout: (frame) => ipcRenderer.invoke('ffmpeg:AVFrame_channel_layout_get', frame),

  // Accessors
  getPacketSize: (pkt) => ipcRenderer.invoke('ffmpeg:AVPacket_size_get', pkt),
  getPacketStreamIndex: (pkt) => ipcRenderer.invoke('ffmpeg:AVPacket_stream_index_get', pkt),
  setPacketStreamIndex: (pkt, idx) => ipcRenderer.invoke('ffmpeg:AVPacket_stream_index_set', pkt, idx),
  getPacketPts: (pkt) => ipcRenderer.invoke('ffmpeg:AVPacket_pts_get', pkt),
  setPacketPts: (pkt, pts) => ipcRenderer.invoke('ffmpeg:AVPacket_pts_set', pkt, pts),
  getPacketDts: (pkt) => ipcRenderer.invoke('ffmpeg:AVPacket_dts_get', pkt),
  setPacketDts: (pkt, dts) => ipcRenderer.invoke('ffmpeg:AVPacket_dts_set', pkt, dts),
  getPacketFlags: (pkt) => ipcRenderer.invoke('ffmpeg:AVPacket_flags_get', pkt),
  getPacketDuration: (pkt) => ipcRenderer.invoke('ffmpeg:AVPacket_duration_get', pkt),
  setPacketDuration: (pkt, duration) => ipcRenderer.invoke('ffmpeg:AVPacket_duration_set', pkt, duration),
  getPacketPos: (pkt) => ipcRenderer.invoke('ffmpeg:AVPacket_pos_get', pkt),
  setPacketPos: (pkt, pos) => ipcRenderer.invoke('ffmpeg:AVPacket_pos_set', pkt, pos),
});
```

6.3 index.html: final Encode Smoke (P2) implementation (audio re-encode + remux of video and other streams + probe)

```javascript
async function testEncodeP2Smoke() {
  p1ClearLog();
  if (!window.ffmpeg) {
    p1Log('Error: window.ffmpeg not available');
    return;
  }
  const buf = getSelectedBufferSafe();
  if (!buf) {
    p1Log('Error: No file selected. Click "Choose File" first.');
    return;
  }
  if (!selectedOutputDir) {
    p1Log('Error: No output directory selected. Click "选择输出目录" first.');
    return;
  }

  // Fail fast if preload.js does not expose every API this test relies on.
  const required = [
    'avFormatOpenInputFromBuffer', 'avFormatFindStreamInfo', 'avFormatFindBestStream',
    'avFormatGetStreamCodecpar', 'avCodecParametersGetCodecId', 'avcodecFindEncoder',
    'avcodecAllocContext3', 'avcodecParametersToContext', 'avcodecOpen2',
    'avcodecParametersFromContext', 'avcodecFindDecoder',
    'avFrameAlloc', 'avFrameUnref', 'avFrameFree',
    'setFramePts', 'getFrameNbSamples', 'getFrameSampleRate',
    'avReadFrame', 'getPacketStreamIndex', 'avPacketUnref',
    'avcodecSendPacket', 'avcodecReceiveFrame', 'avcodecSendFrame', 'avcodecReceivePacket',
    'avPacketAlloc', 'avPacketFree', 'avPacketRescaleTs', 'avcodecParametersCopy',
    'avFormatGetStream', 'AVCodecContext_time_base_get', 'AVStream_time_base_get',
    'AVStream_index_get', 'setPacketStreamIndex', 'setPacketPos',
    'avFormatAllocOutputContext2', 'avFormatNewStream', 'avStreamGetCodecpar',
    'avCodecParametersSetCodecTag', 'avStreamSetTimeBase', 'probeMedia',
    'avFormatOutputNeedsFile', 'avFormatAvioOpen2', 'avFormatWriteHeader',
    'avInterleavedWriteFrame', 'av_write_trailer', 'avFormatAvioClosep',
    'avFormatFreeContext', 'avcodecFreeContext', 'avFormatCloseInput',
  ];
  for (const k of required) {
    if (typeof window.ffmpeg[k] !== 'function') {
      p1Log(`Error: window.ffmpeg.${k} not available`);
      return;
    }
  }

  // Normalize first (see 4.3) so a selected file path cannot turn into "xxx.mp4/yyy.mkv".
  const outDir = normalizeOutputDir(selectedOutputDir);
  const baseName = selectedFileName ? String(selectedFileName).replace(/\.[^/.]+$/, '') : 'input';
  const outPath = `${outDir}/${baseName}.encode_smoke.mkv`;

  let inCtx = 0n;
  let outCtx = 0n;
  let outSt = 0n;
  let outPar = 0n;
  let inPar = 0n;
  let enc = 0n;
  let encCtx = 0n;
  let dec = 0n;
  let decCtx = 0n;
  let inPkt = 0n;
  let outPkt = 0n;
  let frame = 0n;
  let outStreamIndex = -1;
  let outStreamByInIndex = [];
  let outStreamIndexByInIndex = [];

  try {
    // --- Open the input and pick the best audio stream ---
    p1Log(`Open input(buffer): ${buf.byteLength} bytes`);
    const openP = window.ffmpeg.avFormatOpenInputFromBuffer(buf);
    inCtx = typeof withTimeout === 'function' ? await withTimeout(openP, 15000, 'avFormatOpenInputFromBuffer') : await openP;
    if (!inCtx) {
      p1Log('Error: input context is null');
      return;
    }
    const fsRet = await window.ffmpeg.avFormatFindStreamInfo(inCtx, null);
    p1Log(`avFormatFindStreamInfo ret=${fsRet}`);
    if (fsRet < 0) return;
    const bestIdx = await window.ffmpeg.avFormatFindBestStream(inCtx, 1); // 1 = AVMEDIA_TYPE_AUDIO
    p1Log(`avFormatFindBestStream(audio) idx=${bestIdx}`);
    if (bestIdx < 0) return;
    inPar = await window.ffmpeg.avFormatGetStreamCodecpar(inCtx, Number(bestIdx));
    if (!inPar) {
      p1Log('Error: input codecpar is null');
      return;
    }
    const codecId = await window.ffmpeg.avCodecParametersGetCodecId(inPar);
    p1Log(`input codecId=${codecId}`);

    // --- Audio decoder ---
    dec = await window.ffmpeg.avcodecFindDecoder(Number(codecId));
    p1Log(`avcodecFindDecoder => ${dec}`);
    if (!dec) {
      p1Log('Decoder not found for input codecId');
      return;
    }
    decCtx = await window.ffmpeg.avcodecAllocContext3(dec);
    p1Log(`dec avcodecAllocContext3 => ${decCtx}`);
    if (!decCtx) return;
    {
      const dP2C = await window.ffmpeg.avcodecParametersToContext(decCtx, inPar);
      p1Log(`dec avcodecParametersToContext ret=${dP2C}`);
      if (dP2C < 0) return;
    }
    {
      const dOpen = await window.ffmpeg.avcodecOpen2(decCtx, dec, null);
      p1Log(`dec avcodecOpen2 ret=${dOpen}`);
      if (dOpen < 0) return;
    }

    // --- Audio encoder (same codec ID as the input) and its output stream ---
    enc = await window.ffmpeg.avcodecFindEncoder(Number(codecId));
    p1Log(`avcodecFindEncoder => ${enc}`);
    if (!enc) {
      p1Log('Encoder not found for input codecId');
      return;
    }
    p1Log(`Alloc output: ${outPath}`);
    outCtx = await window.ffmpeg.avFormatAllocOutputContext2(outPath, null);
    if (!outCtx) {
      p1Log('Error: output context is null');
      return;
    }
    outSt = await window.ffmpeg.avFormatNewStream(outCtx, enc);
    if (!outSt) {
      p1Log('Error: avFormatNewStream(audio) failed');
      return;
    }
    outStreamIndex = Number(await window.ffmpeg.AVStream_index_get(outSt));
    outStreamByInIndex[Number(bestIdx)] = outSt;
    outStreamIndexByInIndex[Number(bestIdx)] = outStreamIndex;
    p1Log(`output audio stream index=${outStreamIndex}`);
    outPar = await window.ffmpeg.avStreamGetCodecpar(outSt);
    if (!outPar) {
      p1Log('Error: output audio codecpar is null');
      return;
    }
    encCtx = await window.ffmpeg.avcodecAllocContext3(enc);
    p1Log(`avcodecAllocContext3 => ${encCtx}`);
    if (!encCtx) return;
    const p2c = await window.ffmpeg.avcodecParametersToContext(encCtx, inPar);
    p1Log(`avcodecParametersToContext ret=${p2c}`);
    const openRet = await window.ffmpeg.avcodecOpen2(encCtx, enc, null);
    p1Log(`avcodecOpen2 ret=${openRet}`);
    const pfc = await window.ffmpeg.avcodecParametersFromContext(outPar, encCtx);
    p1Log(`avcodecParametersFromContext ret=${pfc}`);
    try {
      await window.ffmpeg.avCodecParametersSetCodecTag(outPar, 0);
    } catch (_) {}

    // Output audio time_base = 1/sample_rate, so one tick equals one sample (see 4.4).
    let sr = 0;
    try {
      const v = await window.ffmpeg.AVCodecContext_sample_rate_get(encCtx);
      sr = Number(v);
    } catch (_) {}
    if (sr > 0) {
      await window.ffmpeg.avStreamSetTimeBase(outSt, 1, sr);
      p1Log(`Set output stream time_base=1/${sr}`);
    } else {
      await window.ffmpeg.avStreamSetTimeBase(outSt, 1, 1000);
      p1Log('Set output stream time_base=1/1000');
    }

    // --- Every other input stream (usually video): copy codecpar for remuxing ---
    for (let i = 0; i < 64; i++) {
      const inSt = await window.ffmpeg.avFormatGetStream(inCtx, i);
      if (!inSt) break;
      if (Number(i) === Number(bestIdx)) continue;
      const inParI = await window.ffmpeg.avFormatGetStreamCodecpar(inCtx, i);
      if (!inParI) {
        outStreamIndexByInIndex[i] = -1;
        continue;
      }
      const outStCopy = await window.ffmpeg.avFormatNewStream(outCtx, 0n);
      if (!outStCopy) {
        throw new Error(`avFormatNewStream(copy) failed at i=${i}`);
      }
      const outParCopy = await window.ffmpeg.avStreamGetCodecpar(outStCopy);
      if (!outParCopy) {
        throw new Error(`avStreamGetCodecpar(copy) failed at i=${i}`);
      }
      const cp = await window.ffmpeg.avcodecParametersCopy(outParCopy, inParI);
      if (cp < 0) {
        throw new Error(`avcodecParametersCopy failed at i=${i}: ${cp}`);
      }
      await window.ffmpeg.avCodecParametersSetCodecTag(outParCopy, 0);
      const inTb = await window.ffmpeg.AVStream_time_base_get(inSt);
      if (inTb && Number(inTb.den) !== 0) {
        await window.ffmpeg.avStreamSetTimeBase(outStCopy, Number(inTb.num), Number(inTb.den));
      }
      const outIdx = Number(await window.ffmpeg.AVStream_index_get(outStCopy));
      outStreamByInIndex[i] = outStCopy;
      outStreamIndexByInIndex[i] = outIdx;
    }

    // --- Open the output file and write the header ---
    const needsFile = await window.ffmpeg.avFormatOutputNeedsFile(outCtx);
    if (needsFile) {
      const ioRet = await window.ffmpeg.avFormatAvioOpen2(outCtx, outPath, 2, null); // 2 = AVIO_FLAG_WRITE
      p1Log(`avFormatAvioOpen2 ret=${ioRet}`);
      if (ioRet < 0) return;
    }
    const wh = await window.ffmpeg.avFormatWriteHeader(outCtx, null);
    if (wh === -1094995529) {
      p1Log(`avFormatWriteHeader ret=${wh} (AVERROR_INVALIDDATA)`);
    } else {
      p1Log(`avFormatWriteHeader ret=${wh}`);
    }
    if (wh < 0) return;

    inPkt = await window.ffmpeg.avPacketAlloc();
    outPkt = await window.ffmpeg.avPacketAlloc();
    frame = await window.ffmpeg.avFrameAlloc();
    if (!inPkt || !outPkt || !frame) {
      p1Log('Error: alloc inPkt/outPkt/frame failed');
      return;
    }

    // Encoder → output stream rescale parameters; fall back to 1/sr if the encoder reports none.
    const encTb = await window.ffmpeg.AVCodecContext_time_base_get(encCtx);
    const outTb = await window.ffmpeg.AVStream_time_base_get(outSt);
    let srcNum = encTb ? Number(encTb.num) : 0;
    let srcDen = encTb ? Number(encTb.den) : 0;
    const dstNum = outTb ? Number(outTb.num) : 0;
    const dstDen = outTb ? Number(outTb.den) : 0;
    p1Log(`enc time_base=${srcNum}/${srcDen} out time_base=${dstNum}/${dstDen}`);
    if (!(srcNum > 0 && srcDen > 0) && sr > 0) {
      srcNum = 1;
      srcDen = sr;
    }

    let nextPtsSamples = 0;
    let wrotePackets = 0;

    // Pull up to `limitPackets` encoded packets, rescale them to the output stream and write them.
    // Declared inside testEncodeP2Smoke() so it shares encCtx/outPkt/outCtx/outStreamIndex (see 4.2).
    async function drainEncoder(limitPackets) {
      for (let i = 0; i < limitPackets; i++) {
        const rp = await window.ffmpeg.avcodecReceivePacket(encCtx, outPkt);
        if (rp !== 0) return rp;
        if (wrotePackets < 8) {
          try {
            const before = {
              pts: await window.ffmpeg.getPacketPts(outPkt),
              dts: await window.ffmpeg.getPacketDts(outPkt),
              dur: await window.ffmpeg.getPacketDuration(outPkt),
              size: await window.ffmpeg.getPacketSize(outPkt),
            };
            p1Log(`enc pkt(before rescale) ${JSON.stringify(before)}`);
          } catch (_) {}
        }
        if (srcDen && dstDen) {
          await window.ffmpeg.avPacketRescaleTs(outPkt, srcNum, srcDen, dstNum, dstDen);
        }
        if (outStreamIndex >= 0) {
          await window.ffmpeg.setPacketStreamIndex(outPkt, outStreamIndex);
        }
        await window.ffmpeg.setPacketPos(outPkt, -1);
        if (wrotePackets < 8) {
          try {
            const after = {
              pts: await window.ffmpeg.getPacketPts(outPkt),
              dts: await window.ffmpeg.getPacketDts(outPkt),
              dur: await window.ffmpeg.getPacketDuration(outPkt),
            };
            p1Log(`enc pkt(after rescale) ${JSON.stringify(after)}`);
          } catch (_) {}
        }
        const wr = await window.ffmpeg.avInterleavedWriteFrame(outCtx, outPkt);
        await window.ffmpeg.avPacketUnref(outPkt);
        if (wr < 0) {
          p1Log(`avInterleavedWriteFrame ret=${wr}`);
          throw new Error(`avInterleavedWriteFrame failed: ${wr}`);
        }
        wrotePackets++;
      }
      return -11;
    }

    // --- Main loop: read packets until EOF ---
    let sentFrames = 0;
    let copiedPackets = 0;
    for (let n = 0; n < 400000; n++) {
      const rr = await window.ffmpeg.avReadFrame(inCtx, inPkt);
      if (rr < 0) break;
      const sidx = await window.ffmpeg.getPacketStreamIndex(inPkt);

      if (Number(sidx) === Number(bestIdx)) {
        // Audio: decode → continuous sample-based PTS → encode → drain.
        const sp = await window.ffmpeg.avcodecSendPacket(decCtx, inPkt);
        await window.ffmpeg.avPacketUnref(inPkt);
        if (sp < 0) {
          p1Log(`dec avcodecSendPacket ret=${sp}`);
          return;
        }
        for (;;) {
          const rf = await window.ffmpeg.avcodecReceiveFrame(decCtx, frame);
          if (rf !== 0) break;
          let nbSamples = 0;
          try {
            nbSamples = Number(await window.ffmpeg.getFrameNbSamples(frame));
          } catch (_) {}
          if (!sr) {
            try {
              sr = Number(await window.ffmpeg.getFrameSampleRate(frame));
            } catch (_) {}
          }
          try {
            await window.ffmpeg.setFramePts(frame, nextPtsSamples);
          } catch (_) {}
          if (nbSamples > 0) {
            nextPtsSamples += nbSamples;
          } else {
            nextPtsSamples += 1024;
          }
          for (;;) {
            const sf = await window.ffmpeg.avcodecSendFrame(encCtx, frame);
            if (sf === -11) {
              await drainEncoder(64);
              continue;
            }
            await window.ffmpeg.avFrameUnref(frame);
            if (sf < 0) {
              p1Log(`enc avcodecSendFrame ret=${sf}`);
              return;
            }
            break;
          }
          await drainEncoder(64);
          sentFrames++;
        }
        continue;
      }

      // Other streams: rescale timestamps to the mapped output stream and write as-is.
      const outMappedIndex = outStreamIndexByInIndex[Number(sidx)];
      const outMappedStream = outStreamByInIndex[Number(sidx)];
      if (typeof outMappedIndex !== 'number' || outMappedIndex < 0 || !outMappedStream) {
        await window.ffmpeg.avPacketUnref(inPkt);
        continue;
      }
      const inSt = await window.ffmpeg.avFormatGetStream(inCtx, Number(sidx));
      const inTb = inSt ? await window.ffmpeg.AVStream_time_base_get(inSt) : null;
      const outTb2 = outMappedStream ? await window.ffmpeg.AVStream_time_base_get(outMappedStream) : null;
      if (inTb && outTb2 && Number(inTb.den) !== 0 && Number(outTb2.den) !== 0) {
        await window.ffmpeg.avPacketRescaleTs(
          inPkt,
          Number(inTb.num),
          Number(inTb.den),
          Number(outTb2.num),
          Number(outTb2.den)
        );
      }
      await window.ffmpeg.setPacketStreamIndex(inPkt, outMappedIndex);
      await window.ffmpeg.setPacketPos(inPkt, -1);
      const wr = await window.ffmpeg.avInterleavedWriteFrame(outCtx, inPkt);
      if (wr < 0) {
        throw new Error(`avInterleavedWriteFrame(copy) failed: ${wr}`);
      }
      copiedPackets++;
      await window.ffmpeg.avPacketUnref(inPkt);
    }
    p1Log(`sentFrames=${sentFrames} copiedPackets=${copiedPackets}`);

    // --- Flush the decoder (null packet) and encode the frames it still holds ---
    {
      const sp = await window.ffmpeg.avcodecSendPacket(decCtx, 0n);
      if (sp < 0) {
        p1Log(`dec avcodecSendPacket(null flush) ret=${sp}`);
      } else {
        for (;;) {
          const rf = await window.ffmpeg.avcodecReceiveFrame(decCtx, frame);
          if (rf !== 0) break;
          let nbSamples = 0;
          try {
            nbSamples = Number(await window.ffmpeg.getFrameNbSamples(frame));
          } catch (_) {}
          try {
            await window.ffmpeg.setFramePts(frame, nextPtsSamples);
          } catch (_) {}
          if (nbSamples > 0) {
            nextPtsSamples += nbSamples;
          } else {
            nextPtsSamples += 1024;
          }
          for (;;) {
            const sf = await window.ffmpeg.avcodecSendFrame(encCtx, frame);
            if (sf === -11) {
              await drainEncoder(64);
              continue;
            }
            await window.ffmpeg.avFrameUnref(frame);
            break;
          }
          await drainEncoder(64);
        }
      }
    }

    // --- Flush the encoder (null frame), then drain everything that remains ---
    {
      for (;;) {
        const sf = await window.ffmpeg.avcodecSendFrame(encCtx, 0n);
        if (sf === -11) {
          await drainEncoder(64);
          continue;
        }
        p1Log(`avcodecSendFrame(null flush) ret=${sf}`);
        break;
      }
    }
    await drainEncoder(8192);
    p1Log(`wrotePackets=${wrotePackets}`);
    const tr = await window.ffmpeg.av_write_trailer(outCtx);
    p1Log(`av_write_trailer ret=${tr}`);

    // --- Read the file back to verify duration/streams ---
    try {
      const probe = await window.ffmpeg.probeMedia(outPath);
      p1Log(`probeMedia: ${typeof probe === 'string' ? probe : JSON.stringify(probe)}`);
    } catch (e) {
      p1Log(`probeMedia error: ${e && e.message ? e.message : String(e)}`);
    }
  } catch (e) {
    p1Log(`Error: ${e && e.message ? e.message : String(e)}`);
  } finally {
    try { if (inPkt) await window.ffmpeg.avPacketFree(inPkt); } catch (_) {}
    try { if (outPkt) await window.ffmpeg.avPacketFree(outPkt); } catch (_) {}
    try { if (frame) await window.ffmpeg.avFrameFree(frame); } catch (_) {}
    try { if (encCtx) await window.ffmpeg.avcodecFreeContext(encCtx); } catch (_) {}
    try { if (decCtx) await window.ffmpeg.avcodecFreeContext(decCtx); } catch (_) {}
    try { if (outCtx) await window.ffmpeg.avFormatAvioClosep(outCtx); } catch (_) {}
    try { if (outCtx) await window.ffmpeg.avFormatFreeContext(outCtx); } catch (_) {}
    try { if (inCtx) await window.ffmpeg.avFormatCloseInput(inCtx); } catch (_) {}
  }
}
```

The function contains:

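- drainEncoder(): handles EAGAIN (-11) and writes the packets

- continuous frame.pts generation (accumulated by sample count)

- for the other streams: codecpar copy + avPacketRescaleTs + avInterleavedWriteFrame (keeps the picture)

- probeMedia(outPath): reports duration/stream info automatically once encoding finishes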
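testEncodeP2Smoke() wraps avFormatOpenInputFromBuffer in withTimeout() only when such a helper exists (`typeof withTimeout === 'function'`), and the post does not show it. A minimal hypothetical sketch, assuming the `withTimeout(promise, ms, label)` signature implied by that call site:

```javascript
// Hypothetical withTimeout(): rejects if `promise` does not settle within `ms`.
// The repository's own helper is not shown; this only matches the call site's signature.
function withTimeout(promise, ms, label) {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error(`${label} timed out after ${ms} ms`)), ms);
  });
  // Whichever settles first wins; always clear the timer so it cannot fire later.
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}

withTimeout(Promise.resolve(42), 1000, 'fast').then((v) => console.log(v)); // 42
withTimeout(new Promise(() => {}), 50, 'avFormatOpenInputFromBuffer')
  .catch((e) => console.log(e.message)); // avFormatOpenInputFromBuffer timed out after 50 ms
```

A timeout only stops the renderer from waiting; it does not cancel the IPC call or the native work already running in the main process.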

7. Reproduction and Verification Steps (for Users)

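- Open the app and click Choose File to pick a media file that has both video and audio

- Click 选择输出目录 (Choose Output Directory) to pick the output directory

- Click Encode Smoke (P2)

- Check the log for:

  - sentFrames=... copiedPackets=... (a large copiedPackets means video packets were written)

  - probeMedia: ... durationUs ... nbStreams ... (the duration should be close to the source's, and nbStreams should include at least video + audio)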
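Step 4 can also be checked automatically. The probe result shape used here (`{ durationUs, nbStreams, streams: [{ type }] }`) is an assumption: the post only shows that probeMedia reports durationUs and nbStreams, so adapt the field names to what your probeMedia actually returns. `checkEncodeSmoke` and the 2% tolerance are hypothetical choices.

```javascript
// Hypothetical pass/fail check for an Encode Smoke run; returns a list of problems.
function checkEncodeSmoke(probe, sourceDurationUs, tolerance = 0.02) {
  const problems = [];
  const types = new Set((probe.streams || []).map((s) => s.type));
  if (!types.has('video')) problems.push('no video stream (remux path not taken?)');
  if (!types.has('audio')) problems.push('no audio stream');
  if (probe.nbStreams < 2) problems.push(`nbStreams=${probe.nbStreams}, expected >= 2`);
  const drift = Math.abs(probe.durationUs - sourceDurationUs) / sourceDurationUs;
  if (!(drift <= tolerance)) problems.push(`duration off by ${(drift * 100).toFixed(1)}%`);
  return problems;
}

// The capped run from 4.5 (4.287 s, audio only) against a hypothetical 60 s source:
// reports the missing video stream, nbStreams=1 and a large duration drift.
console.log(checkEncodeSmoke(
  { durationUs: 4287000, nbStreams: 1, streams: [{ type: 'audio' }] },
  60_000_000,
));
```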

8. Lessons Learned (Applicable to Other Transcoding Pipelines)

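- EAGAIN from an encoder or decoder must be absorbed by draining; do not treat it as an outright failure.

- For duration problems, look at PTS/DTS and time_base first. For audio, generating continuous PTS from sample counts is stable and works in general.

- "No picture" is usually not a player problem: the output simply contains no video stream. Either transcode the video as well, or at least remux (copy) it in.

- Verify every change end to end with probeMedia/ffprobe instead of trusting the player UI alone.

If you need the sample code, feel free to send me a private message.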
